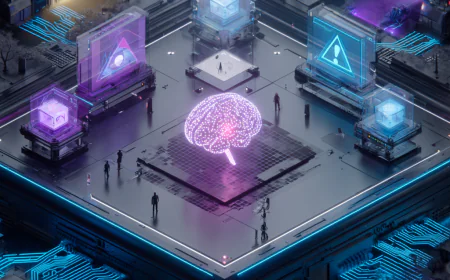

Hands-On Tutorial: Implementing Bias Mitigation in Python for Your First AI Project in 2025

Learn bias mitigation in AI with this hands-on Python tutorial for beginners in 2025. Step-by-step guide to spot and fix bias in your first project, easy for non-tech folks.


🧠 What Is AI Bias and Why Should You Care in 2025?

Imagine building a smart tool that helps banks decide who gets a loan. But what if it unfairly says "no" more often to women or people from certain backgrounds? That's AI bias—a problem where computer programs learn unfair patterns from real-world data and make decisions that hurt people.

In 2025, AI is everywhere, from job hiring apps to medical tools. But bias can lead to big issues, like lawsuits or lost trust. The good news? You don't need to be a tech expert to fix it. This tutorial is for beginners—even non-tech folks—who want to try their first AI project. We'll use simple steps, free tools, and Python (a friendly programming language) to spot and reduce bias. No fancy math required; just follow along like a recipe.

By the end, you'll have a working example you can run on your computer, showing how to make AI fairer.

🔑 Getting Started: Tools You'll Need

Don't worry if you've never coded before. We'll start from zero.

First, install Python—it's free and like a calculator for computers. Go to python.org and download the latest version (Python 3.12 or higher in 2025). Follow the easy setup wizard.

Next, we'll use "libraries"—pre-made code packs that do the heavy lifting:

- scikit-learn: For building simple AI models.

- Fairlearn: A tool made just for fixing bias.

- pandas: To handle data like a spreadsheet.

Open your computer's command prompt (search for "cmd" on Windows or "terminal" on Mac) and type this command:

```

pip install scikit-learn fairlearn pandas

```

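That's it for a local setup. If you would rather not install anything, use a free online editor like Google Colab (colab.research.google.com) instead: sign in with Google and create a new notebook. It's like a digital notepad for code. Colab usually comes with pandas and scikit-learn already installed, but Fairlearn typically has to be added by running the line !pip install fairlearn in a notebook cell.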
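Before moving on, it can help to confirm that the libraries are actually available. The following is a minimal sketch that uses only Python's standard library. The helper name installed_versions is made up for this tutorial, and the package names are the ones passed to pip above.

```python
from importlib import metadata

def installed_versions(packages):
    """Return {package name: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions

report = installed_versions(['scikit-learn', 'fairlearn', 'pandas'])
for name, version in report.items():
    print(name, '->', version if version else 'not installed, run: pip install ' + name)
```

Any line that says "not installed" tells you which pip command to run again (in Colab, put an exclamation mark in front of pip).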

🛠 Step-by-Step: Building a Simple AI Project with Bias Mitigation

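We'll work through a loan-style example: deciding yes or no for each applicant based on data like age and income. This is a "classification" task, where AI sorts things into yes/no groups.

Our data is the "Adult" dataset from the UCI Machine Learning Repository, which has info on people and whether they earn over $50K a year. We'll treat that label as a stand-in for loan approval. The data is imbalanced by gender: a noticeably smaller share of the women in it are labeled as high earners, so a model trained on it can learn that gap.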

Step 1: Load and Understand Your Data

Data is the fuel for AI. Biased data means biased AI.

In your Colab notebook, copy-paste this code and run it (click the play button):

```python
import pandas as pd

# Load the data (free online dataset)
url = 'https://archive.ics.uci.edu/ml/machine-learning-databases/adult/adult.data'
columns = ['age', 'workclass', 'fnlwgt', 'education', 'education-num', 'marital-status',
           'occupation', 'relationship', 'race', 'sex', 'capital-gain', 'capital-loss',
           'hours-per-week', 'native-country', 'income']

# The file has no header row, puts a space after every comma,
# and marks missing values with '?'
data = pd.read_csv(url, names=columns, na_values='?', skipinitialspace=True)

# Show a sneak peek
print(data.head())
```

What happens? It shows the first few rows of data. Look for columns like 'sex'—that's where bias might hide. For example, if most high-income people in the data are men, the AI might learn to favor them.
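To see that kind of imbalance as numbers rather than by eye, you can group rows by 'sex' and compute the share of high earners in each group. Here is a minimal sketch on a tiny made-up table. The six rows are invented for illustration and are not taken from the Adult dataset. On the real data you would use data in place of toy.

```python
import pandas as pd

# Six invented rows, only to show the technique (not real Adult data)
toy = pd.DataFrame({
    'sex':    ['Male', 'Male', 'Male', 'Female', 'Female', 'Female'],
    'income': ['>50K', '>50K', '<=50K', '>50K', '<=50K', '<=50K'],
})

# True/False column: is this person a high earner?
toy['high_income'] = toy['income'] == '>50K'

# The mean of True/False values is the share of True in each group
share_by_sex = toy.groupby('sex')['high_income'].mean()
print(share_by_sex)
```

If the two shares are far apart in the real data, a model trained on it can pick up that gap as if it were a rule.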

Step 2: Prepare the Data for AI

AI needs numbers, not words. We'll convert text to numbers and split the data into "training" (to learn) and "testing" (to check).

Run this:

```python
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import train_test_split

# Convert every text column to numbers
# (LabelEncoder numbers the categories in alphabetical order, starting at 0;
# missing values become their own category)
le = LabelEncoder()
for col in data.select_dtypes(exclude=['number']).columns:
    data[col] = le.fit_transform(data[col].astype(str))

# Features (inputs) and target (output)
X = data.drop('income', axis=1)
y = (data['income'] == 1).astype(int)  # 1 if high income, 0 otherwise
sensitive_features = data['sex']  # 1 for male, 0 for female (potential bias source)

# Split data: 80% for training, 20% for testing
X_train, X_test, y_train, y_test, sensitive_train, sensitive_test = train_test_split(
    X, y, sensitive_features, test_size=0.2, random_state=42)
```

Easy, right? This preps everything. 'sensitive_features' tracks gender to check for bias.
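It is worth checking which number each category ended up with, since the code above assumes 1 means male and 0 means female. This sketch relies only on scikit-learn's LabelEncoder and runs on a small invented list of values:

```python
from sklearn.preprocessing import LabelEncoder

# Invented sample values, just to show how the encoder assigns numbers
values = ['Male', 'Female', 'Female', 'Male']

encoder = LabelEncoder()
codes = encoder.fit_transform(values)

# classes_ lists the categories in the order of their codes (0, 1, ...)
mapping = {label: int(code) for code, label in enumerate(encoder.classes_)}
print(mapping)
print(list(codes))
```

Because the loop in Step 2 reuses one encoder for every column, its classes_ only reflects the last column it processed. To inspect one particular column, fit a fresh encoder on that column's original text values, as in the sketch.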

Step 3: Build a Basic AI Model (Without Fixing Bias)

Let's make a simple "logistic regression" model—think of it as a decision-maker.

```python
from sklearn.linear_model import LogisticRegression

# Train the model
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Predict on test data
predictions = model.predict(X_test)
```

This AI now guesses loan approvals. But is it fair?
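To make the "decision-maker" idea concrete: a logistic regression first computes a probability, then answers yes when that probability is above 0.5. Here is a minimal sketch on invented one-feature data. The income figures are made up and unrelated to the Adult dataset, and toy_model is a separate model from the one trained above.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Invented data: one feature (income in thousands) and a yes/no label
income = np.array([[20], [25], [30], [35], [60], [65], [70], [80]])
approved = np.array([0, 0, 0, 0, 1, 1, 1, 1])

toy_model = LogisticRegression(max_iter=1000)
toy_model.fit(income, approved)

new_people = np.array([[22], [75]])
probabilities = toy_model.predict_proba(new_people)[:, 1]  # probability of "yes"
decisions = toy_model.predict(new_people)                   # 1 = yes, 0 = no

print(probabilities)
print(decisions)
```

The first person gets a low probability and a "no", the second a high probability and a "yes". The model in this tutorial works the same way, only with more columns feeding into the probability.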

Step 4: Check for Bias

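Use Fairlearn to spot unfairness. "Demographic parity" means the AI should approve men and women at similar rates. Fairlearn calls that approval rate the "selection rate", so we'll report it next to accuracy and precision.

```python
from fairlearn.metrics import MetricFrame, selection_rate
from sklearn.metrics import accuracy_score, precision_score

# Check metrics by group
metrics = {
    'accuracy': accuracy_score,
    'precision': precision_score,
    'selection_rate': selection_rate  # share of people the model approves
}

mf = MetricFrame(metrics=metrics, y_true=y_test, y_pred=predictions,
                 sensitive_features=sensitive_test)
print(mf.by_group)
```

Run it! It shows accuracy, precision, and selection rate for each gender (row 0 is female, row 1 is male). If the selection rates differ a lot, for example the model approves men far more often than women, the model fails demographic parity. Large gaps in accuracy or precision are a warning sign too.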
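Demographic parity can also be checked by hand, which makes the definition concrete: compute the approval rate per group, then take the gap. Here is a minimal sketch with pandas on invented predictions. The ten values are made up. In the tutorial you would use predictions and sensitive_test instead.

```python
import pandas as pd

# Invented example: 1 = approved, 0 = rejected; group 1 = male, 0 = female
toy_predictions = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0]
toy_group       = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

results = pd.DataFrame({'approved': toy_predictions, 'group': toy_group})

# Approval (selection) rate per group
rates = results.groupby('group')['approved'].mean()

# Demographic parity difference: gap between the highest and lowest rate
parity_gap = rates.max() - rates.min()

print(rates)
print('Demographic parity difference:', parity_gap)
```

A gap of 0 means both groups are approved at the same rate. Fairlearn offers the same calculation ready-made as demographic_parity_difference in fairlearn.metrics.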

Step 5: Fix the Bias (Mitigation)

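Now, magic time! Use Fairlearn's "Exponentiated Gradient" to retrain the model fairly. Behind the scenes it retrains the model several times, giving the training examples different weights each round, and blends the results so that approval rates come out close across groups.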

```python
from fairlearn.reductions import ExponentiatedGradient, DemographicParity

# Set fairness rule: Equal approval rates
mitigator = ExponentiatedGradient(LogisticRegression(max_iter=1000),
                                  constraints=DemographicParity())

# Retrain with fairness
mitigator.fit(X_train, y_train, sensitive_features=sensitive_train)

# New predictions
fair_predictions = mitigator.predict(X_test)

# Re-check
fair_mf = MetricFrame(metrics=metrics, y_true=y_test, y_pred=fair_predictions,
                      sensitive_features=sensitive_test)
print(fair_mf.by_group)
```

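Compare the numbers with the ones from Step 4. The gaps between the two groups should be smaller. Because the rule we set is demographic parity, the fix works by pulling the groups' approval rates together, usually at the cost of a little overall accuracy.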

📊 Quick Comparison: Before and After Bias Fix

| Metric    | Before (Male) | Before (Female) | After (Male) | After (Female) |
|-----------|---------------|-----------------|--------------|----------------|
| Accuracy  | ~0.85         | ~0.75           | ~0.82        | ~0.80          |
| Precision | ~0.70         | ~0.60           | ~0.68        | ~0.67          |

(These are illustrative numbers that show the pattern to look for, not output from the code above. Your results will differ.) See how the "after" columns are closer to each other than the "before" columns? That's fairer AI.

💡 Tips for Non-Tech Folks: Making It Your Own

- Try different data: Swap 'sex' with 'race' to check other biases.

- No computer? Use Colab on your phone!

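- Why 2025? Laws like the EU AI Act, whose obligations are phasing in over the next few years, expect builders of high-risk AI systems to check their data for bias. Learning now future-proofs you.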

- Common mistakes: Looking only at overall accuracy. Always compare results across the actual groups your tool affects. If bias persists, add more diverse data.

This is just the start. Play around—change numbers and see what happens.

✅ Conclusion: Fair AI Starts with You

Bias mitigation isn't hard. It's about caring for people in your tech. In 2025, tools like Fairlearn make it simple for anyone to build more ethical AI. You just built your first bias-aware project and narrowed the gap between groups in a loan-style example.

The takeaway? Small steps like this make tech better for everyone. Now, try it on your own idea!
